According to Android Police, YouTube’s algorithm pushed a video titled “AFRICAN GIAT MAASAI” with an extremely NSFW thumbnail showing topless women to users across unrelated search results, including those with restricted mode enabled. The 16-second video itself was harmless, but the explicit thumbnail appeared in searches for content like “old man playing gta for the first time” and Breaking Bad clips, affecting users of all ages. This algorithmic failure, which was widely reported across multiple Reddit threads, raises serious questions about YouTube’s content moderation systems.

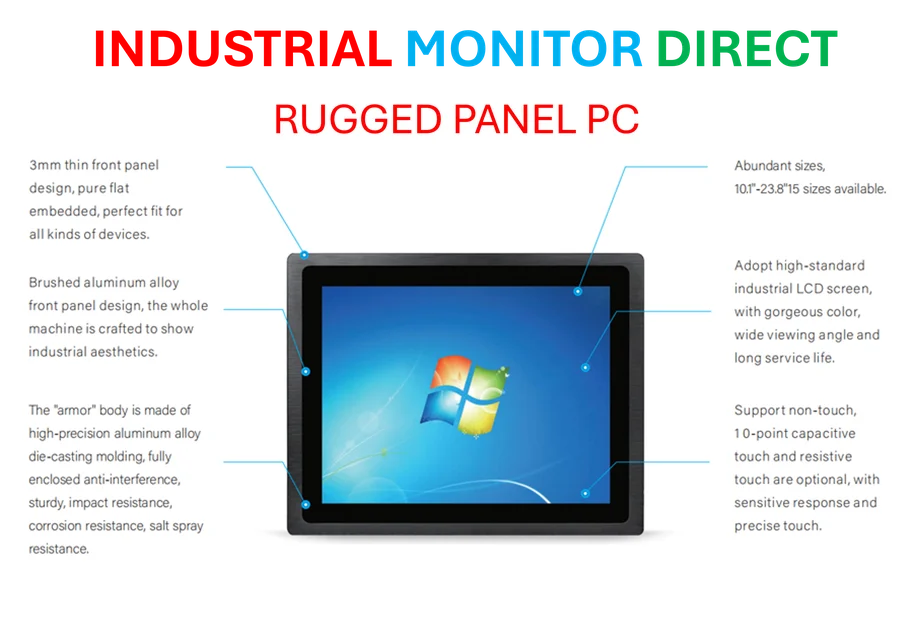



Understanding YouTube’s Recommendation Engine Vulnerabilities

YouTube’s recommendation algorithm relies on a complex mix of engagement metrics, user behavior patterns, and content relationships, all of which can be exploited by creators seeking visibility. The platform’s massive scale means that even minor algorithmic glitches can affect millions of users within hours. What makes this incident particularly concerning is that the channel responsible, Afro Zulu, appears to have made a pattern of using suggestive thumbnails for otherwise benign content, which suggests it has identified a weakness in YouTube’s content classification systems. A system that classifies the video but not the thumbnail attached to it has a fundamental flaw in how it evaluates and categorizes material for recommendation.

Critical Analysis of Content Moderation Failures

This incident reveals several systemic weaknesses in YouTube’s approach to content moderation. First, the platform’s focus on video content analysis appears to have created a blind spot for thumbnail moderation, allowing creators to use explicit imagery as clickbait while keeping the videos themselves compliant. Second, the fact that this content appeared even with restricted mode enabled indicates serious flaws in age-gating and content filtering systems. Most concerning is the algorithmic amplification: the video appeared in completely unrelated searches, suggesting YouTube’s recommendation engine prioritized engagement metrics over content relevance and safety. As detailed reporting shows, this wasn’t an isolated case but part of a pattern that earned the channel millions of views.


Broader Implications for Platform Responsibility

The incident occurs amid growing regulatory scrutiny of how social media platforms handle content moderation and algorithmic recommendations. YouTube’s scale means that algorithmic failures can instantly expose millions of users, including children, to inappropriate content. This creates significant legal and reputational risks, particularly as governments worldwide increase pressure on platforms to improve user safety features. For advertisers, these incidents undermine confidence in brand-safe environments, potentially affecting YouTube’s substantial advertising revenue. The rapid spread across social media platforms demonstrates how quickly such failures can damage platform trust and user confidence.

The Path Forward for Algorithmic Safety

Moving forward, YouTube and other streaming platforms will need to implement more sophisticated content analysis systems that evaluate thumbnails with the same rigor as video content. This likely requires advanced computer vision AI specifically trained to detect inappropriate imagery in thumbnail previews. Additionally, platforms must develop better contextual understanding to prevent irrelevant but engagement-driving content from appearing in unrelated searches. The temporary fix of removing the specific video doesn’t address the underlying algorithmic vulnerability that allowed this exploitation to occur. As user-generated content platforms continue to dominate digital entertainment, their ability to prevent such incidents will become increasingly critical to their long-term viability and regulatory compliance.
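To make the idea of evaluating thumbnails "with the same rigor as video content" concrete, here is a minimal sketch in Python. It is not YouTube’s actual system: the `ContentScores` type, the risk scores, and the threshold values are all hypothetical and assume that separate classifiers have already scored the video and its thumbnail on a 0 to 1 scale. The point is only that the recommendation gate looks at the worse of the two scores, so a harmless video cannot carry an explicit thumbnail into search results.

```python
from dataclasses import dataclass


@dataclass
class ContentScores:
    """Hypothetical classifier outputs in [0, 1]; higher means riskier."""
    video_risk: float      # assumed score for the video itself
    thumbnail_risk: float  # assumed score for the thumbnail image


def effective_risk(scores: ContentScores) -> float:
    # Use the worse of the two scores so a clean video
    # cannot mask an explicit thumbnail (or the reverse).
    return max(scores.video_risk, scores.thumbnail_risk)


def eligible_for_recommendation(
    scores: ContentScores,
    restricted_mode: bool,
    threshold: float = 0.5,             # illustrative value, not a real setting
    restricted_threshold: float = 0.2,  # stricter illustrative value
) -> bool:
    # Restricted mode applies the stricter limit.
    limit = restricted_threshold if restricted_mode else threshold
    return effective_risk(scores) < limit


# A harmless 16-second clip with an explicit thumbnail is blocked in both modes.
clip = ContentScores(video_risk=0.05, thumbnail_risk=0.95)
print(eligible_for_recommendation(clip, restricted_mode=False))
print(eligible_for_recommendation(clip, restricted_mode=True))
```

Taking the maximum rather than an average is a deliberate choice in this sketch: averaging would let a very low video score dilute a very high thumbnail score, which is the gap the incident appears to have exposed.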