According to Forbes, brain-computer interfaces (BCIs) are nearing an inflection point in real-world functionality, with non-invasive approaches, which use external sensors instead of surgery, taking center stage. The article breaks down the core sensor technologies: EEG, which is cheap and widespread but noisy; MEG, which is spatially precise but currently requires a room-sized, million-dollar installation; and fNIRS, popularized by Bryan Johnson’s Kernel, which measures blood flow. A startup called Conduit, founded by Oxford and Cambridge researchers, is taking an AI-first approach, aiming to collect over 10,000 hours of brain recordings from thousands of participants by year’s end to train a foundation model. Their astonishing goal is to build a thought-to-text AI that can decode a user’s thoughts before they’re formulated into words, and they claim the system is already beginning to work. The entire field hinges on the convergence of scalable brain data collection and modern AI’s ability to extract signal from noise.

The Sensor Soup Problem

Here’s the thing about trying to read a brain from the outside: the signal is a mess. The article does a good job laying out the trade-offs. EEG is the old reliable workhorse (the first human recording dates to 1924!), but your skull smears the brain’s crisp electrical signals into a blurry, staticky mess by the time they reach the scalp. Blink your eyes, and you’ve just drowned out the brain’s whisper with a cannon blast of electrical noise. So why does anyone bother? Scale. It’s dirt cheap and everywhere. MEG is far better spatially because magnetic fields aren’t distorted by bone, but today’s systems need cryogenically cooled sensors inside a magnetically shielded room, a setup that costs more than a mansion. For now, it’s the opposite of scalable. fNIRS is a clever workaround, using near-infrared light to measure blood flow instead of electricity, but blood flow lags neural activity by seconds, so it’s slower. Basically, we’re trying to listen to a symphony through a thick wall with a bunch of different, imperfect microphones.
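To make the blink problem concrete, here is a toy Python sketch. It is not anything from the Forbes article or from Conduit. It fakes a few seconds of EEG as a small 10 Hz rhythm plus one large blink-shaped bump, then drops any one-second epoch whose peak amplitude crosses a threshold. The sampling rate, the amplitudes, the Gaussian blink shape, and the function names are all illustrative assumptions.

```python
import math

FS = 250  # samples per second (assumed sampling rate)

def simulate_eeg(seconds=4.0, blink_at=2.5):
    """Return a toy EEG trace in microvolts: a 10 Hz rhythm plus one blink."""
    trace = []
    for i in range(int(seconds * FS)):
        t = i / FS
        # Small "brain" rhythm, about 10 uV in amplitude (illustrative value).
        brain = 10.0 * math.sin(2 * math.pi * 10.0 * t)
        # Blink modeled as a Gaussian bump peaking at 150 uV (illustrative value).
        blink = 150.0 * math.exp(-((t - blink_at) ** 2) / (2 * 0.1 ** 2))
        trace.append(brain + blink)
    return trace

def reject_artifact_epochs(trace, epoch_seconds=1.0, threshold_uv=75.0):
    """Split the trace into epochs and sort them by peak absolute amplitude."""
    size = int(epoch_seconds * FS)
    kept, dropped = [], []
    for start in range(0, len(trace) - size + 1, size):
        epoch = trace[start:start + size]
        peak = max(abs(v) for v in epoch)
        # Keep the epoch index only if the peak stays under the threshold.
        (kept if peak < threshold_uv else dropped).append(start // size)
    return kept, dropped

trace = simulate_eeg()
kept, dropped = reject_artifact_epochs(trace)
print("kept epochs:", kept, "dropped epochs:", dropped)
```

With these made-up numbers, the only epoch thrown away is the one containing the blink. Real recordings are far messier, which is part of why simple threshold rules only go so far.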

The AI Hail Mary

This is where the modern AI playbook kicks in. The “Bitter Lesson,” the title of a 2019 essay by Rich Sutton, is that general methods that leverage computation and data ultimately beat approaches built on hand-crafted human knowledge. So if you’re a startup like Conduit, you look at EEG’s terrible signal-to-noise ratio and see an opportunity. AI models, especially large foundation models, are freakishly good at finding patterns in chaos. Think about how a large language model is trained on a huge slice of the internet, which is a ton of noisy, messy text. The bet is that the same principle applies to the brain: collect a colossal, unprecedented dataset of brain recordings, throw immense compute at it, and the AI will learn to decode the latent signals that hand-engineered software can’t find. It’s a brute-force, scaling approach. They’re even mixing sensor data (EEG + fNIRS, etc.) because, just like with multi-modal AI for vision and language, more data streams seem to make the model smarter. It’s a gamble, but it’s the same gamble that gave us ChatGPT.

The Ultrasound Wild Card

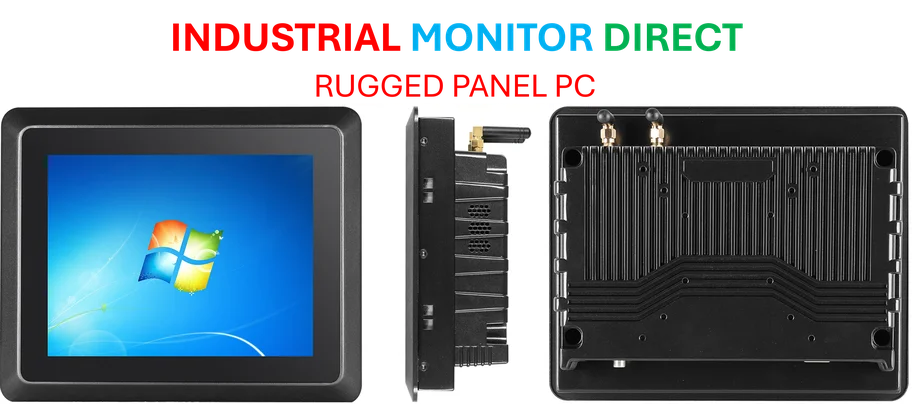

Forbes hints that the “buzziest” modality is focused ultrasound, which is fascinating because it flips the script. While EEG, MEG, and fNIRS are primarily for *reading* the brain, ultrasound has serious potential for *writing* to it—modulating neural activity. This is the path to true two-way communication. Imagine not just decoding a thought, but sending a clear signal *into* the brain to treat a neurological condition or, eventually, provide a sensory input. The non-invasive BCI race isn’t just about building the best mind-reader; it’s about building a platform for interaction. This isn’t consumer gadgetry; it’s precision instrumentation.

So Is This For Real?

The claims are mind-bending—thought-to-text before you’ve even formed the words? It sounds like pure sci-fi. And a healthy dose of skepticism is warranted. We’ve been “five years away” from practical BCI for decades. But the context is different now. The AI tools are qualitatively different. The amount of venture capital and brainpower being thrown at this is different. The article’s core thesis feels right: you can’t separate the future of AI from BCI. If AI is an external, silicon-based intelligence, BCI is the bridge to our biological one. The real near-term use cases will likely be more mundane: advanced neurofeedback, sleep optimization (as seen with startups like Somnee), or medical diagnostics. But the direction of travel is clear. They’re not just trying to measure brain waves anymore; they’re trying to build a universal translator for the language of the mind. And if the scaling gambit works, things could get very strange, very fast.