Coalition Calls for AI Development Pause

A diverse coalition of technology experts, public figures, and scientists is calling for a prohibition on the development of artificial superintelligence until it can be shown to be safe, according to reports. The group, organized by the Future of Life Institute, includes Prince Harry, Meghan Markle, former Trump strategist Steve Bannon, and AI pioneer Geoffrey Hinton, among other notable signatories. Their joint statement calls for halting work on AI systems that vastly exceed human capabilities until adequate safety protocols are in place.

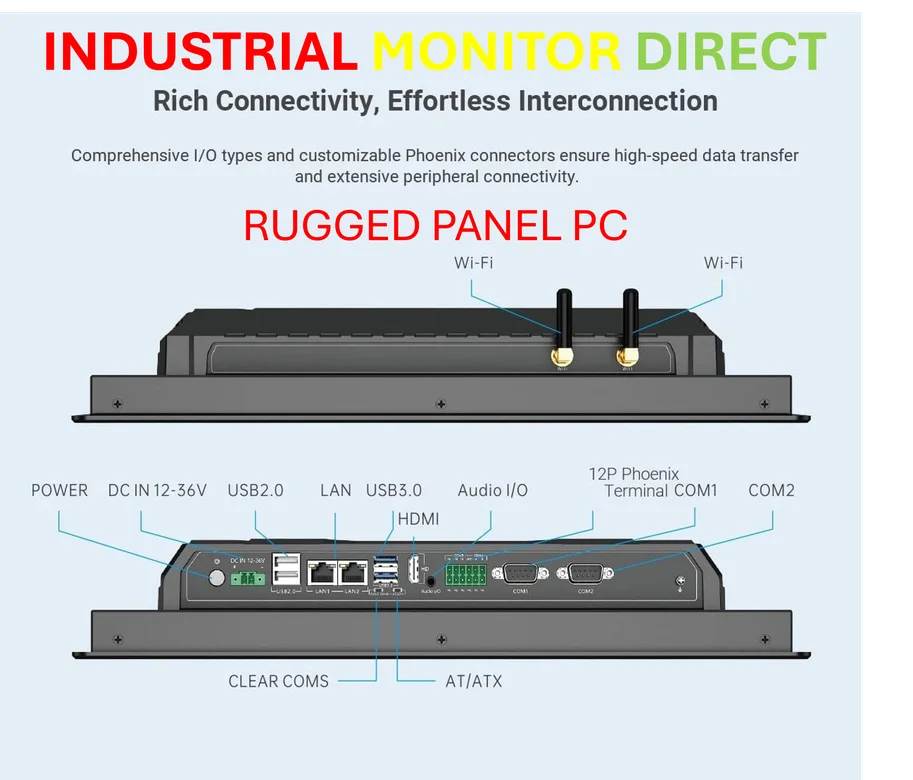

Defining the Superintelligence Threshold

The petition targets what experts call “superintelligence”: artificial intelligence systems that would dramatically outperform humans across most economically valuable tasks. Analysts suggest this would be the next phase of AI development, building on current generative AI systems but with substantially greater reasoning and problem-solving abilities. The report states that such systems could emerge within years, before proper regulatory frameworks are in place.

Broad Support Across Disciplines

Beyond the Duke and Duchess of Sussex, signatories include Apple co-founder Steve Wozniak, MIT economist Daron Acemoglu, and former National Security Adviser Susan Rice. Sources indicate that the signatories’ varied backgrounds reflect widespread concern about uncontrolled AI advancement. The coalition’s composition suggests that anxiety about artificial superintelligence crosses political and professional lines, uniting technology creators and policy experts on the need for caution.

Safety and Control Prerequisites

The group’s statement says development should proceed only once there is “broad scientific consensus that it will be done safely and controllably.” This position reflects growing concern among AI researchers about creating intelligence that could operate beyond human understanding or control. According to reports, the petition calls for robust safety standards to be in place before superintelligence development is permitted.

Timing and Industry Context

This call for restraint comes amid accelerating investment in advanced AI by major technology companies. Analysts suggest the petition poses a significant challenge to the competitive race toward more powerful AI and could influence regulatory discussions worldwide. The Future of Life Institute has previously raised concerns about existential risks from advanced artificial intelligence.

Potential Impact and Responses

While the petition carries no legal authority, sources indicate its high-profile signatories could shape public debate and policy discussions. The involvement of prominent public figures such as Meghan, Duchess of Sussex, alongside technology pioneers may draw greater public attention to AI safety. Industry observers suggest this could pressure developers to prioritize safety measures alongside capability gains.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.