Landmark Legislation Sets New Tech Accountability Standards

California has established the nation’s most comprehensive framework for artificial intelligence and social media platform accountability with Governor Gavin Newsom signing five new bills into law this month. According to reports, the legislation creates unprecedented guardrails for technology companies operating in the state, addressing growing concerns about AI’s psychological impact on young users and the increasing use of chatbots for emotional support.

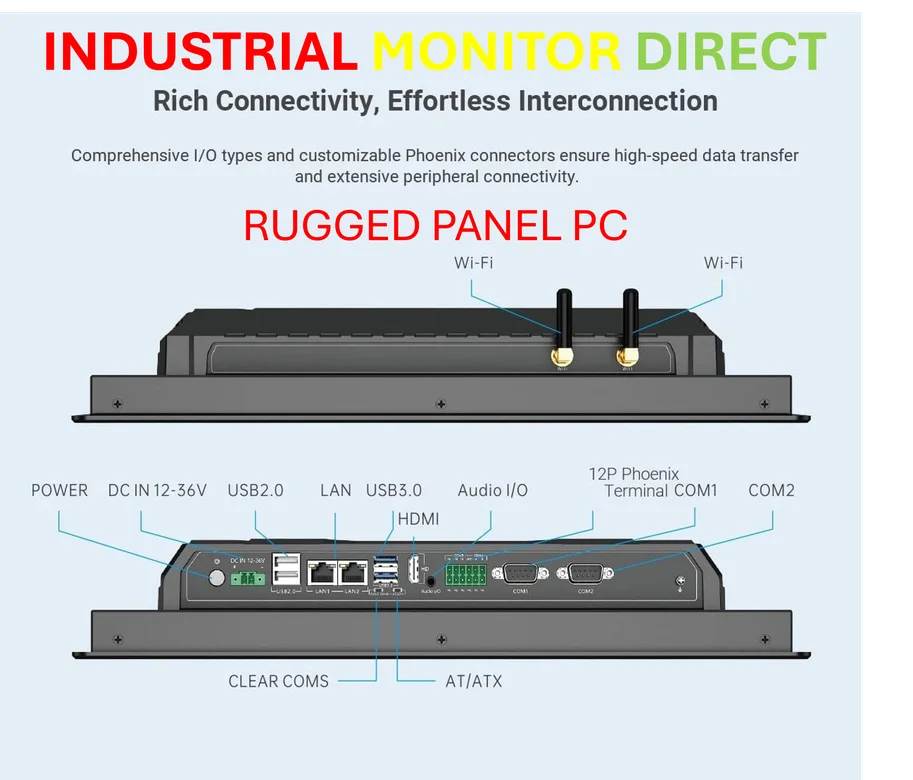

Comprehensive Chatbot Safety Protocols

The Companion Chatbot Safety Act (SB 243) introduces rigorous new requirements for AI companion platforms, sources indicate. Under the legislation, chatbot providers must detect and respond to users expressing self-harm intentions, clearly disclose that conversations are artificially generated, and restrict minors from viewing explicit material. The law further requires providers to remind users under 18 to take a break at least every three hours and, beginning in 2027, to publish annual reports on their safety and intervention protocols.

Enhanced Social Media and Device Regulations

Additional measures extend protections across the digital ecosystem. AB 56 requires social media platforms like Instagram and Snapchat to display mental health warnings, while AB 1043 compels device manufacturers including Apple and Google to implement age-verification tools in their app stores. The deepfake liability law (AB 621) significantly strengthens penalties for distributing nonconsensual sexually explicit AI-generated content, allowing civil damages up to $50,000 for standard violations and $250,000 for malicious infractions.

AI Training Data Transparency Requirements

The Generative Artificial Intelligence: Training Data Transparency Act (AB 2013), scheduled to take effect January 1, 2026, will require AI developers to disclose detailed summaries of the datasets used to train their models. Analysts suggest this represents a major shift toward transparency, as developers must indicate whether data sources are proprietary or public, describe collection methodologies, and make this documentation publicly available.

Immediate Business Impact and Industry Response

The regulatory changes carry significant implications for major technology firms, particularly given that many affected companies, including OpenAI, Meta, Google and Apple, are headquartered in California. Reports indicate that OpenAI has described the legislation as a “meaningful move forward” for AI safety, while Google’s senior director of government affairs characterized AB 1043 as a “thoughtful approach” to protecting children online. Industry analysts suggest the compliance burden is likely to be spread across the sector, since all companies must comply at the same time.

Broader Regulatory Context and Future Initiatives

California’s legislative package aligns with global trends in AI oversight, following the European Union’s AI Act and similar age-verification laws in states like Utah and Texas. According to reports, regulatory momentum may continue building in California, with former U.S. Surgeon General Vivek Murthy and Common Sense Media CEO Jim Steyer launching a “California Kids AI Safety Act” ballot initiative that would require independent audits of youth-focused AI tools, ban the sale of minors’ data, and introduce AI literacy programs in schools.

Structural Shift in AI Accountability Framework

The legislation represents a fundamental redefinition of how governments approach technology accountability. With surveys cited by CNBC indicating that one in six Americans now rely on chatbots for emotional support and over 20% report forming personal attachments to them, lawmakers are expanding compliance frameworks beyond traditional privacy and content moderation toward behavioral safety and liability. For enterprises, the new standards could accelerate adoption of “safety by design” principles and make compliance readiness a prerequisite for market entry, potentially creating competitive advantages for companies demonstrating responsible data use and transparent model documentation.

Setting National Precedent for Tech Regulation

As Governor Newsom stated, “Our children’s safety is not for sale,” positioning California’s approach as a potential benchmark for other jurisdictions. The framework illustrates how innovation ecosystems are evolving under the premise that long-term AI growth depends on public trust and verifiable safety. With these comprehensive regulations now enshrined in law, analysts suggest California has established a new national standard for technology accountability that other states and federal regulators are likely to reference in developing their own oversight frameworks.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.