According to Guru3D.com, SAPPHIRE Technology has officially launched its EDGE AI Series mini PCs, powered by AMD’s latest Ryzen AI 300 Series processors, following an initial preview earlier this year. The systems measure just 117 × 111 × 30 mm yet include a dedicated neural processing unit (NPU) rated at up to 50 trillion operations per second (TOPS), enough to run real-time AI tasks such as object detection and voice recognition locally rather than in the cloud. The series comprises three models: the EDGE AI 370 with the Ryzen AI 9 HX 370, the EDGE AI 350 with the Ryzen AI 7 350, and the EDGE AI 340 with the Ryzen AI 5 340. All are sold as barebone kits without RAM, storage, or an operating system. The systems support up to 96GB of DDR5 SO-DIMM memory and multiple M.2 NVMe drives, provide 2.5 Gb Ethernet, and are Copilot+ PC ready for Microsoft’s upcoming AI features in Windows 11. The launch brings this class of AI acceleration to a notably compact form factor.

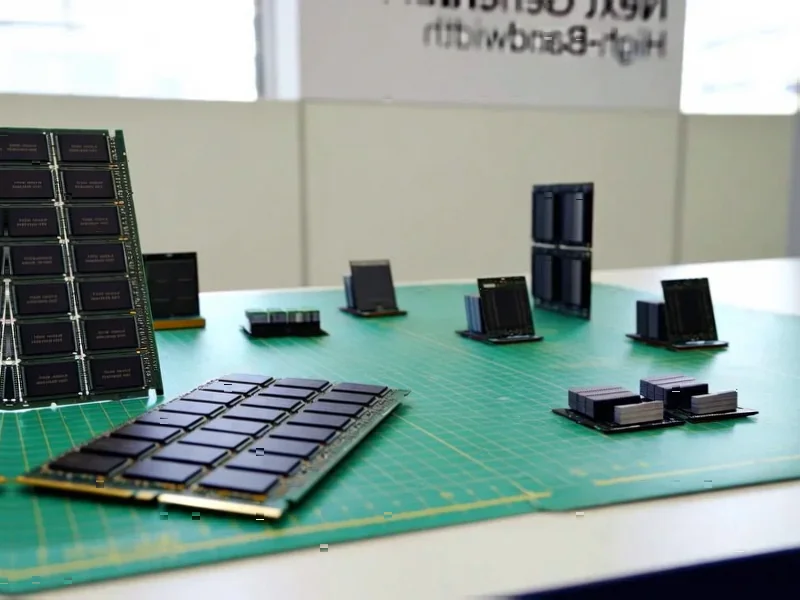

The XDNA2 NPU Architecture Explained

What makes these systems particularly compelling is their use of AMD’s XDNA2 architecture, a substantial step up from first-generation NPU designs. Rather than running inference on general-purpose GPU compute, XDNA2 uses a dataflow architecture optimized for neural network inference. Its dataflow arrays can be reconfigured for different AI models, so the hardware adapts to each model’s computational pattern instead of forcing software to fit fixed hardware. The 50 TOPS rating is a peak figure, but it suggests the NPU can run transformer models and large language model inference locally, work that until recently was largely confined to data centers. Running these workloads on the NPU also draws significantly less power than running them on CPU or GPU cores, which suits always-on AI applications in edge environments.
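
To put the 50 TOPS figure in perspective, a rough back-of-the-envelope calculation helps. The sketch below is not a benchmark: the per-inference operation count and the utilization factor are illustrative assumptions, not figures from AMD or Sapphire, and vendor TOPS ratings are typically peak numbers quoted at low precision.

```python
def peak_inferences_per_second(npu_tops: float,
                               ops_per_inference: float,
                               utilization: float = 1.0) -> float:
    """Upper bound on inferences per second implied by a peak TOPS rating.

    npu_tops          -- peak rating in trillions of operations per second
    ops_per_inference -- operations one forward pass needs (model-specific, assumed)
    utilization       -- fraction of peak actually sustained, in (0, 1] (assumed)
    """
    if ops_per_inference <= 0:
        raise ValueError("ops_per_inference must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return npu_tops * 1e12 * utilization / ops_per_inference


if __name__ == "__main__":
    # Hypothetical vision model needing 8 billion operations per frame.
    ops = 8e9
    print(peak_inferences_per_second(50, ops))       # ceiling at 100% of peak
    print(peak_inferences_per_second(50, ops, 0.3))  # a more cautious 30% of peak
```

For this hypothetical model the ceiling works out to 6,250 frames per second at full peak and roughly 1,875 at 30% utilization. Real throughput also depends on memory bandwidth, numeric precision, and how well the model maps onto the NPU, so the result is best treated as an upper bound.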

Transforming Edge Computing Economics

The EDGE AI Series changes the cost-benefit analysis for deploying AI at the network edge. Traditional edge computing involved trade-offs among performance, power consumption, and physical footprint: you could usually have two of the three, but rarely all of them. With 50 TOPS of NPU performance in a sub-120W power envelope and a compact chassis, Sapphire’s systems address all three constraints at once. This enables use cases like real-time video analytics in retail environments, where feeds from multiple cameras can be analyzed locally for customer behavior patterns without overwhelming network bandwidth. For industrial applications, predictive maintenance algorithms can run continuously on factory floors, analyzing sensor data for signs of equipment failure without the latency of cloud round-trips. The barebone configuration adds flexibility, allowing organizations to tailor memory and storage to specific workloads while keeping the same AI acceleration capabilities across deployments.
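
The bandwidth argument for local video analytics can be made concrete with simple arithmetic. The following sketch compares the uplink needed to stream every camera to the cloud with the uplink needed to send only detection metadata from an edge box. The `CameraSite` class and every number in the example (camera count, bitrate, event rate, record size) are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class CameraSite:
    cameras: int            # number of cameras at the site (assumed)
    stream_mbps: float      # encoded video bitrate per camera, in Mbit/s (assumed)
    events_per_sec: float   # detections emitted per camera per second (assumed)
    event_bytes: int        # size of one metadata record, in bytes (assumed)

    def cloud_uplink_mbps(self) -> float:
        """Uplink needed if every raw stream is sent to the cloud."""
        return self.cameras * self.stream_mbps

    def edge_uplink_mbps(self) -> float:
        """Uplink needed if only detection metadata leaves the site."""
        bits_per_sec = self.cameras * self.events_per_sec * self.event_bytes * 8
        return bits_per_sec / 1e6


if __name__ == "__main__":
    site = CameraSite(cameras=8, stream_mbps=4.0, events_per_sec=2.0, event_bytes=500)
    print(f"cloud: {site.cloud_uplink_mbps():.3f} Mbit/s")
    print(f"edge:  {site.edge_uplink_mbps():.3f} Mbit/s")
```

Under these assumptions, streaming needs about 32 Mbit/s of uplink while metadata needs about 0.064 Mbit/s, a difference of several hundred times. The exact ratio depends entirely on the chosen inputs, but the shape of the trade-off is what the paragraph above describes.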

Overcoming Thermal and Power Constraints

Packing this level of AI performance into such a small form factor presents significant engineering challenges, particularly around thermal management and power delivery. The 120W power adapter suggests these systems operate near the upper limit of what is thermally feasible in a compact design. Unlike larger workstations, which can use massive heatsinks and multiple fans, the EDGE AI Series must dissipate heat within just 30 mm of height. This likely involves vapor chamber cooling or advanced thermal interface materials to move heat away from the Ryzen AI processor quickly. The power delivery system must also be efficient, since every watt lost in conversion becomes heat inside the chassis, which calls for high-quality voltage regulation modules and careful PCB layout. These constraints may also help explain why the systems are sold without memory and storage: thermal behavior changes with the specific components installed, which makes a one-size-fits-all configuration harder to justify in such a compact, high-performance system.
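
The link between conversion efficiency and heat is simple to quantify. The sketch below computes the power lost as heat in a voltage regulation stage for a given load. The 60 W load and the 90% and 95% efficiency values are assumptions for illustration only; the article gives no such figures for these systems.

```python
def conversion_loss_watts(load_watts: float, efficiency: float) -> float:
    """Heat dissipated by a power stage delivering load_watts at a given efficiency.

    Input power is load / efficiency, so the loss is load * (1 / efficiency - 1).
    """
    if load_watts < 0:
        raise ValueError("load_watts must be non-negative")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return load_watts * (1.0 / efficiency - 1.0)


if __name__ == "__main__":
    load = 60.0  # hypothetical sustained load in watts
    for eff in (0.90, 0.95):
        print(f"{eff:.0%} efficient: {conversion_loss_watts(load, eff):.2f} W lost as heat")
```

With these assumed values, moving from 90% to 95% efficiency roughly halves the heat added by the power stage, from about 6.7 W to about 3.2 W. In a 30 mm chassis that is a meaningful share of the cooling budget.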

Shifting AI Development Paradigms

Perhaps the most significant impact of systems like the EDGE AI Series is how they change AI development and deployment workflows. Developers can prototype and test AI applications on the same class of hardware that will run in production, which reduces the “it worked on my machine” problem that plagues many AI deployments. With 50 TOPS of local NPU performance, teams can iterate on models without waiting for cloud GPU instances or dealing with data transfer bottlenecks. For enterprises, this supports a “develop once, deploy anywhere” approach to AI applications, in which the same codebase runs on development laptops and production edge devices alike. Copilot+ PC readiness indicates these systems are positioned to use the growing ecosystem of Windows AI applications, potentially creating a standardized platform for AI-enhanced productivity tools that behave consistently across form factors and use cases.
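
One common way to keep a single codebase portable across machines with and without an NPU is to pick an inference backend at startup and fall back gracefully. The article does not name a runtime, so the sketch below assumes ONNX Runtime. The provider names are the ones ONNX Runtime uses for the AMD Vitis AI, DirectML, and CPU backends as far as I know, and should be checked against AMD’s Ryzen AI documentation. The preference order and the helper names `choose_providers` and `open_session` are hypothetical.

```python
from typing import Sequence

# Assumed preference order: NPU first, then GPU via DirectML, then CPU.
PREFERRED = (
    "VitisAIExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
)


def choose_providers(available: Sequence[str],
                     preferred: Sequence[str] = PREFERRED) -> list[str]:
    """Return the preferred providers that are available, in preference order."""
    chosen = [p for p in preferred if p in available]
    if not chosen:
        raise RuntimeError(f"no usable execution provider in {list(available)}")
    return chosen


def open_session(model_path: str):
    """Create an ONNX Runtime session using the best available backend."""
    # Imported lazily so choose_providers() works without onnxruntime installed.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


if __name__ == "__main__":
    # A CPU-only development machine and an NPU-equipped box run the same code.
    print(choose_providers(["CPUExecutionProvider"]))
    print(choose_providers(["CPUExecutionProvider", "VitisAIExecutionProvider"]))
```

On a real Ryzen AI system the NPU backend may need additional provider options or model quantization before it is actually used, so this shows only the selection logic, not a complete deployment recipe.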

The Road Ahead for Edge AI

Looking forward, systems like the EDGE AI Series are likely just the beginning of a broader trend toward specialized AI acceleration at the edge. As NPU performance continues to scale while power requirements decrease, we’ll likely see even more compact form factors handling increasingly complex AI workloads. A plausible next step is multi-NPU systems that coordinate across devices for distributed AI inference, enabling applications like coordinated robotics or building-wide intelligence systems. The tool-less chassis design and magnetic panels suggest Sapphire expects these systems to be upgraded and reconfigured frequently as AI models evolve and new use cases emerge. For organizations investing in edge AI infrastructure, this modular approach offers longevity that fixed-configuration systems can’t match, letting today’s hardware adapt to tomorrow’s AI requirements without complete system replacements.