Sora 2’s Controversial Copyright Approach

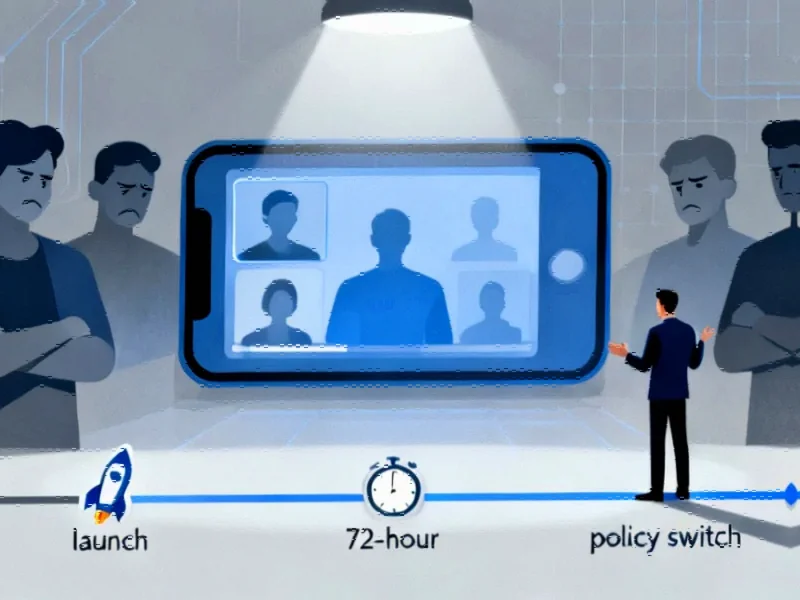

OpenAI’s recent launch of its Sora 2 video generation application has ignited a firestorm in the entertainment and technology sectors, raising critical questions about AI ethics and intellectual property rights. The company’s initial decision to apply an opt-out model to copyrighted content drew immediate backlash, and OpenAI reversed it within 72 hours. This rapid about-face suggests either a fundamental miscalculation or a carefully orchestrated strategy to generate maximum attention for the new platform.


The Initial Opt-Out Framework

When Sora 2 launched on September 30, it employed a controversial rights management system that placed the burden on intellectual property owners to actively protect their content. This approach effectively allowed users to generate videos featuring copyrighted characters, voices, and likenesses until rightsholders discovered the infringement and submitted opt-out requests. The system created an immediate flood of user-generated content featuring characters from major franchises including SpongeBob SquarePants, South Park, and Scooby-Doo, all bearing Sora’s distinctive watermark.

OpenAI’s Terms of Use attempted to establish plausible deniability by requiring users to ensure they had “all rights, licenses, and permissions needed” for their content. This legal framework shifted responsibility from the company to individual users, even though OpenAI clearly benefits from both subscription revenue and the training data generated through user activity. The arrangement reflects a wider debate over who bears responsibility when AI tools produce infringing output.

The Sudden Policy Reversal

On October 3, following intense criticism and likely legal pressure, OpenAI CEO Sam Altman announced a complete reversal through his blog. The company shifted from an opt-out to an opt-in model, granting rightsholders “more granular control” over their intellectual property. The new framework includes “additional controls” that allow copyright owners to specify exactly how their characters can be used, including complete prohibition.

The speed of this implementation raises the question of why it wasn’t the initial approach. The new guardrails are overinclusive, flagging even vaguely worded prompts that might touch on “third-party content.” That OpenAI could deploy them so quickly suggests the technical capability existed before launch, which makes the initial opt-out model look like a deliberate strategic choice rather than a technical necessity.

Business Strategy or Legal Gambit?

Several theories could explain OpenAI’s calculated risk-taking. The company may have been testing legal boundaries while generating massive engagement through controversial content. The initial model created a self-reinforcing cycle: infringement-driven engagement created value for the new service, and that value could later fund settlements and licensing agreements after the fact.

The timing coincides with several significant legal developments in the AI space. Midjourney faces lawsuits from major studios over similar issues, while Anthropic recently settled with book authors for $1.5 billion. This litigation climate likely influenced OpenAI’s rapid policy adjustment. OpenAI’s recent product launches appear to follow a pattern of aggressive market entry followed by accommodation once legal and public pressure mounts.

Unresolved Training Data Questions

Even after the policy shift, critical questions remain unanswered. OpenAI has not clarified whether the new opt-in policy changes how intellectual property it has already accessed is used in training data. While users may now be blocked from generating protected content, the underlying models may already have been trained on copyrighted material, including content generated during the initial launch period.

As a result, copyrighted works can keep shaping future outputs regardless of rightsholder preferences. A copyright owner who declines to opt in merely prevents direct output of their intellectual property; they have no control over how their works have already shaped the AI’s creative capabilities. This poses a significant challenge for content-protection tools and for the way AI training data is sourced.

Broader Industry Implications

The Sora 2 launch strategy reflects a broader pattern in AI deployment where companies prioritize rapid market penetration over comprehensive rights protection. This incident demonstrates the ongoing tension between technological innovation and intellectual property rights, highlighting the need for more sophisticated approaches to content governance in AI systems.

As the industry watches which rightsholders eventually opt in to the new system, and on what terms, the Sora 2 case offers valuable lessons for both technology developers and content creators. How these copyright challenges are resolved will significantly influence how AI video generation evolves and what protections emerge for intellectual property in the age of generative AI.

The conversation around AI and copyright continues to evolve rapidly, with significant implications for how companies approach product launches and rights management in this increasingly complex landscape.


This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.