According to VentureBeat, OpenAI has introduced Aardvark, a GPT-5-powered autonomous security researcher agent now available in private beta. The system has been operational for several months on internal codebases and with select alpha partners, achieving 92% recall of known and synthetic vulnerabilities in benchmark testing. Aardvark has already discovered ten vulnerabilities that received CVE identifiers when deployed on open-source projects, with all findings responsibly disclosed under OpenAI’s updated coordinated disclosure policy. The agent integrates with GitHub Cloud and follows a multi-stage pipeline that emulates human security researchers through semantic analysis, test case execution, and diagnostic tool use. This launch signals OpenAI’s broader movement into specialized agentic AI systems following recent releases of ChatGPT agent and the Codex coding agent.



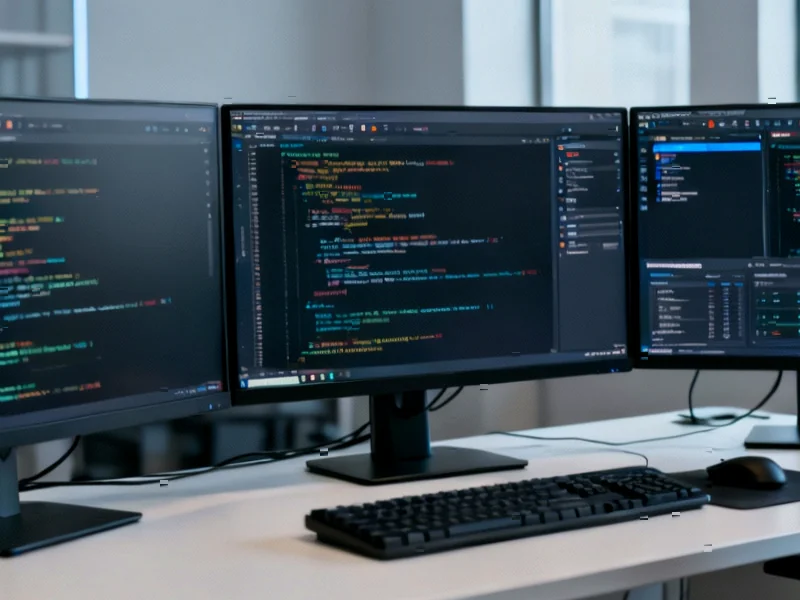

The Agentic Revolution in Cybersecurity

What makes Aardvark particularly significant isn’t just its security capabilities, but its embodiment of agentic AI principles applied to a critical domain. Unlike traditional security tools that operate as passive scanners, Aardvark demonstrates what happens when AI systems gain persistent agency within development environments. This represents a fundamental shift from tools that security teams use to partners that work alongside them. The implications are profound: security expertise becomes operationalized as a continuous service rather than a periodic audit. For organizations struggling with the 40,000+ CVEs reported annually, this could transform security from a bottleneck to an integrated capability.

Beyond Traditional Security Tooling

Most current application security tools rely on signature-based detection, fuzzing, or software composition analysis – approaches that struggle with novel attack patterns and logic flaws. Aardvark’s LLM-driven approach represents a different paradigm entirely. By reasoning about code semantics rather than matching patterns, it can identify vulnerabilities that traditional tools miss, including the “complex bugs beyond traditional security flaws” mentioned in testing. This capability becomes increasingly valuable as software grows more complex and interconnected. The system’s ability to generate patches suggests it’s not just finding problems but actively participating in remediation – a level of autonomy that could significantly reduce mean time to resolution for security issues.
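To make the contrast concrete, here is a minimal sketch of signature-based detection and the kind of logic flaw it cannot see. It is not how Aardvark or any commercial scanner works; the signature list, the `signature_scan` helper, and both code snippets are hypothetical and exist only for illustration.

```python
import re

# Hypothetical signature list: each entry is (name, compiled regex).
SIGNATURES = [
    ("os-command-injection", re.compile(r"os\.system\(")),
    ("eval-use", re.compile(r"\beval\(")),
]


def signature_scan(source: str) -> list[str]:
    """Return the names of all signatures whose pattern appears in the source."""
    return [name for name, pattern in SIGNATURES if pattern.search(source)]


# A snippet with a well-known dangerous call: a signature catches it.
SHELL_SNIPPET = '''
import os
def ping(host):
    os.system("ping -c 1 " + host)
'''

# A snippet with a logic flaw: any caller can read any invoice because
# ownership is never checked. No "dangerous" call appears in the text.
LOGIC_SNIPPET = '''
def get_invoice(db, current_user, invoice_id):
    return db["invoices"][invoice_id]
'''

print(signature_scan(SHELL_SNIPPET))  # ['os-command-injection']
print(signature_scan(LOGIC_SNIPPET))  # []
```

The second snippet passes the scan cleanly even though it is exploitable. Catching it requires reasoning about what the function is supposed to enforce, which is the gap that semantic analysis is claimed to fill.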

Disrupting the Application Security Market

The application security market, valued at over $15 billion, has been dominated by established players like Snyk, Checkmarx, and Veracode. Aardvark’s entry represents a potential disruption vector through its autonomous operation and integration with development workflows. While current tools often create friction by generating false positives or requiring manual triage, Aardvark’s claimed “low false positive rate” and human-auditable insights could appeal to development teams already overwhelmed by security alerts. If OpenAI’s performance claims hold at scale, we could see pressure on traditional vendors to incorporate similar AI capabilities or risk being perceived as legacy solutions.

The Road to Production Readiness

Despite promising early results, Aardvark faces significant challenges before becoming enterprise-ready. The current GitHub Cloud requirement rules it out for organizations that host their code on other platforms. More fundamentally, the trustworthiness of autonomous security analysis remains unproven at scale. Security teams will need extensive validation before relying on AI-generated patches, particularly for critical systems. The codebase access required also raises questions about intellectual property protection, though OpenAI’s assurance that beta code won’t be used for training helps address concerns. Organizations participating in the private beta will need to carefully evaluate both effectiveness and risk before broader deployment.
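One way a team might run that validation is to seed a test repository with known vulnerabilities and score the agent's findings against them. The sketch below assumes findings and seeded issues can be matched by an identifier; the `evaluate` function and the issue IDs are hypothetical and do not reflect OpenAI's benchmark methodology.

```python
def evaluate(findings: set[str], seeded: set[str]) -> dict[str, float]:
    """Score reported findings against a set of deliberately seeded issues."""
    true_positives = findings & seeded    # seeded issues that were found
    false_positives = findings - seeded   # reports that match no seeded issue
    recall = len(true_positives) / len(seeded) if seeded else 0.0
    precision = len(true_positives) / len(findings) if findings else 0.0
    return {
        "recall": recall,
        "precision": precision,
        "false_positives": float(len(false_positives)),
    }


# Hypothetical benchmark: 25 seeded issues, 23 of them reported,
# plus one report that corresponds to no seeded issue.
seeded_issues = {f"SEED-{i:02d}" for i in range(1, 26)}
reported = {f"SEED-{i:02d}" for i in range(1, 24)} | {"SPURIOUS-01"}

scores = evaluate(reported, seeded_issues)
print(f"recall={scores['recall']:.2f} precision={scores['precision']:.2f}")
```

Recall alone says how much was found, not how much noise came with it, so a team would want both numbers on its own code before trusting a headline figure.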


OpenAI’s Expanding Enterprise Footprint

Aardvark represents OpenAI’s continued expansion from pure model provider to solution developer. By creating specialized agents for coding, browsing, and now security, OpenAI is building a portfolio of enterprise-ready AI products that address specific business needs. This strategy allows them to capture more value from their underlying models while demonstrating practical applications beyond conversational AI. The security focus is particularly strategic given the universal need for better application security and the potential for Aardvark to become embedded in development workflows. Success in this domain could open doors to adjacent areas like compliance auditing, code quality analysis, and infrastructure security.

The Human-Machine Partnership in Security

Looking forward, Aardvark’s most significant impact may be in redefining the relationship between security professionals and automation. Rather than replacing human experts, systems like Aardvark could elevate their role from vulnerability hunters to security architects and validators. The technology could enable smaller security teams to protect larger codebases more effectively, addressing the industry’s chronic talent shortage. As these systems mature, we might see specialized security agents for different domains – cloud infrastructure, open-source software supply chains, or compliance frameworks – creating an ecosystem of AI-powered defenders working alongside human teams. The unusual name Aardvark, referencing an animal known for digging and finding things underground, seems particularly apt for a system designed to uncover hidden vulnerabilities.
