According to Windows Report, Microsoft has announced Fairwater, its new AI superfactory in Atlanta, Georgia, built specifically for hyperscale AI computing. The facility combines NVIDIA Blackwell GPUs with custom networking and advanced power management systems that achieve 4×9 availability at 3×9 cost. Each rack packs up to 72 GPUs connected via NVLink, providing 1.8 TB/s of GPU-to-GPU bandwidth and 14 TB of pooled memory available to each GPU. Microsoft developed software and GPU-based energy controls that stabilize power use without relying on costly backup generators. The company also introduced a two-tier, 800 Gbps Ethernet-based networking system with its SONiC switch OS and the Multi-Path Reliable Connected (MRC) protocol, developed with NVIDIA and OpenAI. Fairwater connects to Microsoft’s broader AI WAN optical network, which added 120,000 new fiber miles last year.
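
To put the "4×9 availability at 3×9 cost" claim in perspective, here is a rough sketch of the downtime each level allows per year. It assumes "3×9" and "4×9" mean the usual three nines (99.9%) and four nines (99.99%) of availability; the function names are illustrative and not from Microsoft's announcement.

```python
# Rough downtime budget per availability level.
# Assumption: "N×9" means N nines of availability (3 -> 99.9%, 4 -> 99.99%).

HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def availability_from_nines(nines: int) -> float:
    """Return availability as a fraction, e.g. 3 nines -> 0.999."""
    return 1 - 10 ** (-nines)


def max_downtime_hours_per_year(nines: int) -> float:
    """Return the yearly downtime allowed at the given number of nines."""
    return HOURS_PER_YEAR * 10 ** (-nines)


if __name__ == "__main__":
    for n in (3, 4):
        hours = max_downtime_hours_per_year(n)
        print(f"{n} nines: {availability_from_nines(n):.4%} available, "
              f"about {hours:.2f} h ({hours * 60:.1f} min) of downtime per year")
```

Under that reading, three nines allows roughly 8.76 hours of downtime a year, while four nines allows about 52.6 minutes, so the claim amounts to a tenfold cut in allowed downtime without the usual cost premium.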

Microsoft’s Power Play

This isn’t just another data center announcement. Microsoft is fundamentally rethinking how AI infrastructure should be built. The power management angle is particularly brilliant. Instead of throwing expensive generators at the problem, they’re using software to smooth out demand. That’s a huge cost savings that directly impacts their ability to compete on AI pricing.

And the timing couldn’t be more strategic. With AI workloads exploding and power consumption becoming a major constraint, Microsoft is essentially future-proofing their infrastructure. They’re not just building for today’s models – they’re building for whatever comes next in the AI arms race.

The Hardware Density Race

72 GPUs per rack? 14 TB of pooled memory? Those numbers are absolutely wild. We’re talking about some of the highest compute densities ever achieved in commercial infrastructure. This is Microsoft flexing their hardware engineering muscles in a way we haven’t seen before.

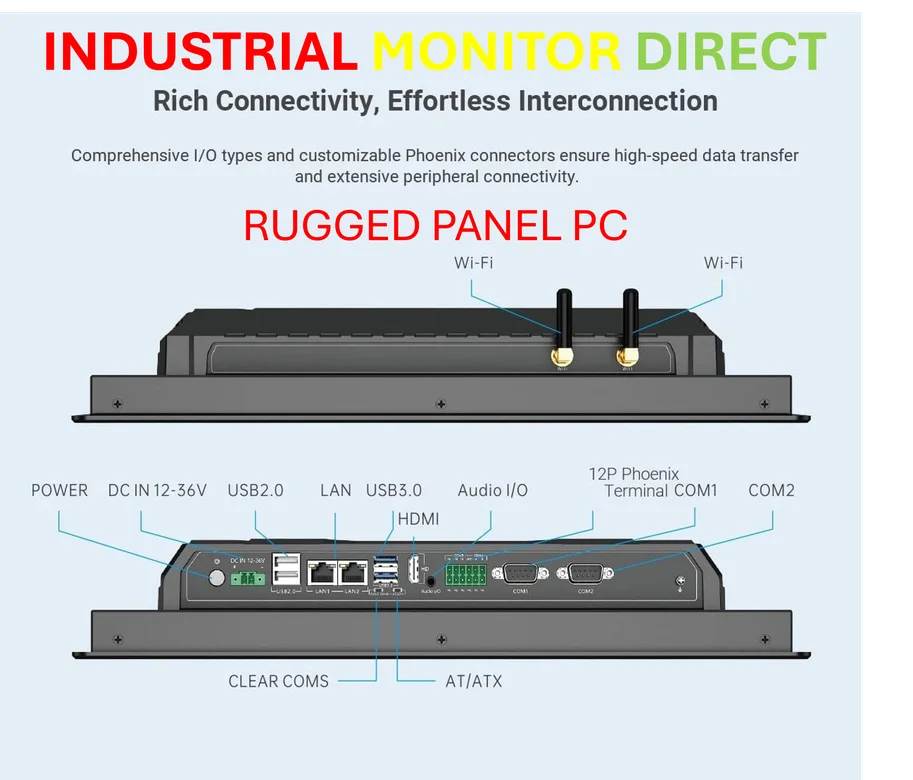

But here’s the thing – this level of density creates its own challenges. Heat management, power delivery, networking bottlenecks. The fact that Microsoft is willing to publish these specs suggests they’ve solved the reliability equation. For companies needing industrial-grade computing power, whether it’s AI training or complex manufacturing applications, this represents a new benchmark.

What This Means for the AI Race

Microsoft isn’t just competing with Google and Amazon here. They’re competing with everyone building large AI models. By creating this unified infrastructure, they’re essentially saying “build your next big thing on our platform.” The partnership with NVIDIA and OpenAI is particularly telling – this is about creating the default infrastructure for the next generation of AI applications.

The networking improvements are just as important as the raw compute power. That 800 Gbps Ethernet and MRC protocol? That’s about eliminating bottlenecks that can cripple distributed training jobs. When you’re moving terabytes of data between GPUs, every millisecond counts.
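
For a sense of scale, here is a back-of-the-envelope sketch of what 800 Gbps means for moving data. It assumes a single link running at full line rate with no protocol overhead, congestion, or retransmission, so real transfers will be slower; the helper names are hypothetical.

```python
# Idealized transfer-time arithmetic for a single network link.
# Assumptions: full line rate, no protocol overhead, decimal units (1 TB = 1e12 bytes).


def transfer_seconds(payload_bytes: float, link_gbps: float) -> float:
    """Return the time in seconds to move payload_bytes over a link of link_gbps."""
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9)


def bytes_per_millisecond(link_gbps: float) -> float:
    """Return how many bytes the link carries in one millisecond."""
    return link_gbps * 1e9 / 8 / 1000


if __name__ == "__main__":
    one_terabyte = 1e12
    print(f"1 TB over 800 Gbps: {transfer_seconds(one_terabyte, 800):.1f} s")
    print(f"Per millisecond at 800 Gbps: {bytes_per_millisecond(800) / 1e6:.0f} MB")
```

In this idealized case a terabyte takes about 10 seconds on one link, and each millisecond carries about 100 MB, which is why stalls of even a few milliseconds add up quickly across a distributed training job.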

So where does this leave the competition? Playing catch-up, basically. Microsoft just raised the bar for what enterprise AI infrastructure should look like. And with their existing Azure footprint, they can roll this architecture out globally faster than anyone else. The AI infrastructure war just got a lot more interesting.
