Metalearning Helps Neural Networks Overcome Classic Challenges

Recent advances in metalearning are addressing longstanding challenges that have kept artificial neural networks behind human cognition, according to research reported in Nature Machine Intelligence. The work centers on giving machines both an incentive to improve a specific skill and opportunities to practice it. This creates an explicit optimization framework that contrasts with conventional AI training methods.

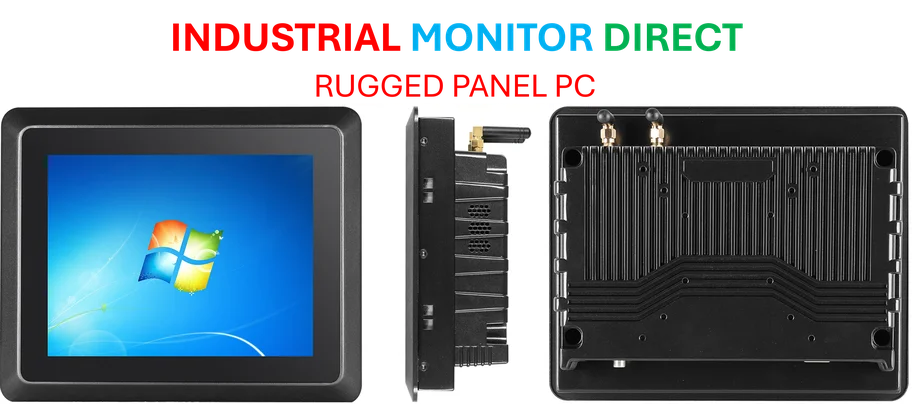

Solving Four Classic AI Challenges

According to analysts, the metalearning framework has made significant progress on four fundamental limitations that have long troubled neural networks:

- systematic generalization (combining familiar parts in new ways)
- catastrophic forgetting (losing old skills when learning new ones)
- few-shot learning (learning from a handful of examples)
- multi-step reasoning

The approach reportedly tackles each of these through a targeted practice regime. Traditional methods hope that desired behaviors will emerge indirectly. This framework instead directly optimizes for specific abilities through structured incentives.
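
To make the idea of a practice regime concrete, the sketch below shows how metalearning episodes for a toy compositional task might be generated. It is a minimal illustration under stated assumptions, not the researchers' code. The nonsense words, the color outputs, the `and` combination rule and all function names are hypothetical.

Word meanings are reshuffled in every episode, so a learner cannot succeed by memorizing one fixed answer table. It has to read the meanings off a few study examples (few-shot learning) and then combine them in a way it has not seen (systematic generalization).

```python
import random

# Hypothetical vocabulary: nonsense words whose meanings change in every episode.
WORDS = ["dax", "wif", "lug", "zup"]
COLORS = ["RED", "GREEN", "BLUE", "YELLOW"]


def make_episode(rng: random.Random):
    """Build one practice episode: study examples plus a held-out query."""
    colors = COLORS[:]
    rng.shuffle(colors)  # fresh word-to-color mapping for this episode only
    meaning = dict(zip(WORDS, colors))
    # Study examples show each word on its own.
    study = [(word, [meaning[word]]) for word in WORDS]
    # The query joins two words with "and"; that combination never appears in the study set.
    a, b = rng.sample(WORDS, 2)
    query = (f"{a} and {b}", [meaning[a], meaning[b]])
    return study, query


def infer_and_answer(study, query_input):
    """Hand-written reference learner: read meanings off the study set, then compose them."""
    lexicon = {word: out[0] for word, out in study}
    return [lexicon[tok] for tok in query_input.split() if tok != "and"]


def fixed_memorizer(query_input):
    """Baseline that ignores the study set and always applies one fixed mapping."""
    fixed = dict(zip(WORDS, COLORS))
    return [fixed[tok] for tok in query_input.split() if tok != "and"]


rng = random.Random(0)
episodes = [make_episode(rng) for _ in range(200)]
infer_acc = sum(infer_and_answer(s, q[0]) == q[1] for s, q in episodes) / len(episodes)
memo_acc = sum(fixed_memorizer(q[0]) == q[1] for s, q in episodes) / len(episodes)
print(f"infer-from-examples: {infer_acc:.2f}, fixed memorizer: {memo_acc:.2f}")
```

In a real metalearning setup, a neural network would replace the hand-written `infer_and_answer`. The premise of the approach is that training on many such episodes pushes the network toward the infer-and-combine strategy rather than a memorized table.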

Large Language Models Validate the Approach

The report argues that the success of large language models is evidence for the framework's effectiveness. These models incorporate key aspects of the approach, particularly sequence prediction with feedback, trained on highly diverse data. According to the researchers, this may explain why modern language models perform better on challenges that stumped earlier neural network architectures.
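
The phrase "sequence prediction with feedback" can be illustrated with a small sketch. Its assumptions are hypothetical:

- a toy vocabulary of nonsense words with colors as answers;
- invented function names;
- a hand-coded predictor standing in for a trained language model.

Each episode is flattened into one token stream of alternating inputs and answers, as in next-token prediction. When predicting an answer, the predictor sees only the tokens before it. Those tokens include the correct answers to earlier items, and that visible history serves as the feedback.

```python
import random

# Hypothetical toy vocabulary; the word-to-color mapping is hidden and reshuffled per episode.
WORDS = ["dax", "wif", "lug", "zup"]
COLORS = ["RED", "GREEN", "BLUE", "YELLOW"]


def make_trials(rng, n_trials=12):
    """One episode: a fresh hidden mapping, queried repeatedly in random order."""
    shuffled = COLORS[:]
    rng.shuffle(shuffled)
    meaning = dict(zip(WORDS, shuffled))
    inputs = [rng.choice(WORDS) for _ in range(n_trials)]
    return [(word, meaning[word]) for word in inputs]


def to_sequence(trials):
    """Interleave inputs and answers into one stream, as in next-token prediction."""
    tokens, scored = [], []
    for x, y in trials:
        tokens += [x, y]
        scored += [False, True]  # only predictions of answer tokens are scored
    return tokens, scored


def copy_from_context(prefix):
    """Stand-in predictor: reuse the answer that followed the latest earlier
    occurrence of the current input (that earlier answer is the feedback)."""
    current = prefix[-1]
    for i in range(len(prefix) - 3, -1, -2):  # step back over earlier input positions
        if prefix[i] == current:
            return prefix[i + 1]
    return None  # no feedback yet for this input


def score(tokens, scored):
    """Predict each scored token from the tokens before it only."""
    return [copy_from_context(tokens[:t]) == tokens[t]
            for t in range(len(tokens)) if scored[t]]


rng = random.Random(1)
trials = make_trials(rng)
tokens, scored = to_sequence(trials)
hits = score(tokens, scored)
distinct = len({x for x, _ in trials})
print(f"{sum(hits)}/{len(hits)} correct; misses = {len(hits) - sum(hits)}, first-seen words = {distinct}")
```

The stand-in misses exactly once per distinct word, on its first appearance, because no feedback for that word is in the context yet. A trained model would have to discover a strategy like `copy_from_context` on its own from many such sequences. The sketch is only meant to show the data format, in which feedback is simply earlier answers left in the context.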

Mathematical Optimization and Cognitive Science Convergence

The research brings together advanced mathematical optimization techniques and insights into human cognition and brain function. Sources indicate that researchers are studying how natural environments provide incentives and practice opportunities for human learning. They are using those insights to design more effective training regimens for artificial systems. This interdisciplinary approach reportedly helps machines learn to make difficult generalizations in ways that more closely resemble human cognition.

Broader Implications and Future Research

The framework also offers a new perspective on human development. Analysts suggest that natural learning environments may supply an especially effective mix of incentives and practice. Researchers are reportedly exploring whether similar principles could improve AI safety and reliability. The findings also arrive amid continued growth in the computational resources available for AI.

Practical Applications and Industry Impact

As the research progresses, practical applications are emerging across multiple sectors. Reports indicate that companies are applying metalearning principles to build more adaptable AI systems. Meanwhile, debates over regulation and infrastructure will shape how these advances are deployed in real-world settings.

The metalearning approach represents a significant shift in how researchers conceive of and carry out AI training. It moves beyond traditional methods toward systems that learn and adapt more like their biological counterparts.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.