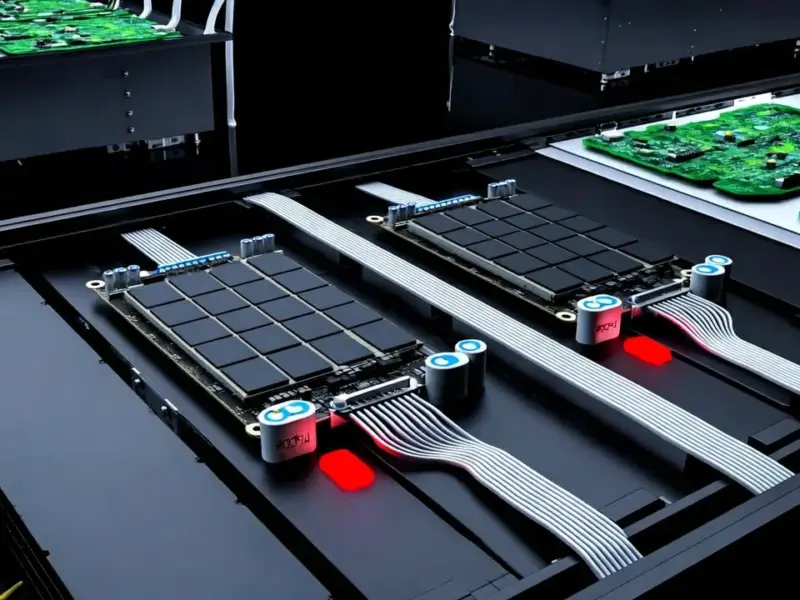

According to ExtremeTech, Google has made its Ironwood Tensor Processing Units (TPUs) generally available this week, showcasing a massive superpod that links 9,216 Ironwood TPUs in a single system. Google says the new chips deliver four times the performance of the TPU v6e and ten times the performance of the TPU v5p for both training and inference workloads. The superpod offers Inter-Chip Interconnect (ICI) speeds of up to 9.6 Tbps and 1.77 PB of shared High Bandwidth Memory (HBM). Google Cloud reported a 34% year-over-year increase in third-quarter revenue, and the company says it has signed more cloud computing deals worth over $1 billion this year than in the previous two years combined. One major customer, Anthropic, recently expanded its relationship with Google to access up to one million TPU chips in 2026.

The hardware behind the hype

So what makes Ironwood so special? This isn't an incremental upgrade. Google claims a full 9,216-chip Ironwood pod delivers more than 24 times the compute power of the El Capitan supercomputer. That's a striking number given what El Capitan represents in traditional supercomputing, though the comparison deserves a caveat: it pits the pod's low-precision AI throughput against El Capitan's double-precision scientific benchmark results. The 9.6 Tbps interconnect matters just as much. It gives the chips high-bandwidth links for exchanging data, which is crucial for distributed AI training, where time spent waiting on communication between chips can drag down performance.
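
The interconnect figure is easier to reason about in bytes. Here is a minimal back-of-envelope sketch in Python, assuming decimal units and that the full quoted 9.6 Tbps is available to a single transfer (a simplification; real throughput depends on topology, link direction, and contention). The 10 GB payload is a hypothetical example, not a figure from Google.

```python
# Back-of-envelope: convert the quoted 9.6 Tbps interconnect figure into bytes
# per second and estimate ideal transfer times. Assumes decimal units and that
# the full rate is usable by one transfer (hypothetical simplification).

BITS_PER_BYTE = 8

def tbps_to_gb_per_s(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second (decimal units)."""
    return tbps * 1_000 / BITS_PER_BYTE

def ideal_transfer_seconds(payload_gb: float, tbps: float) -> float:
    """Time to move payload_gb gigabytes at tbps, ignoring latency and overhead."""
    return payload_gb / tbps_to_gb_per_s(tbps)

ici_tbps = 9.6
print(f"{ici_tbps} Tbps = {tbps_to_gb_per_s(ici_tbps):.0f} GB/s")

# Hypothetical 10 GB payload, e.g. a chunk of gradients or activations.
print(f"10 GB takes ~{ideal_transfer_seconds(10, ici_tbps) * 1_000:.1f} ms (ideal)")
```

This models bandwidth only. Per-message latency is a separate cost the sketch ignores, so the result is an ideal lower bound on transfer time rather than a prediction.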

Here's the thing that really stands out: 1.77 PB of shared HBM that all of those TPUs can access. That's an enormous pool of fast memory, enough to keep the weights of multiple massive language models resident on the accelerators instead of constantly shuffling data back and forth from slower storage.
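
The memory figure is easier to picture per chip. A rough sketch in Python, assuming decimal units, an even split of the 1.77 PB across all 9,216 chips, and a purely hypothetical 1-trillion-parameter model stored at 2 bytes per parameter:

```python
# Back-of-envelope: split the superpod's quoted 1.77 PB of shared HBM evenly
# across its 9,216 chips. Assumes decimal units (1 PB = 1,000,000 GB); the
# 1.77 PB figure is itself rounded, so results are approximate.

TOTAL_HBM_PB = 1.77
NUM_CHIPS = 9_216
GB_PER_PB = 1_000_000
TB_PER_PB = 1_000

def hbm_per_chip_gb(total_pb: float, chips: int) -> float:
    """Approximate HBM per chip in gigabytes, assuming an even split."""
    return total_pb * GB_PER_PB / chips

def weights_size_tb(params: float, bytes_per_param: int) -> float:
    """Size of model weights in terabytes (decimal units), weights only."""
    return params * bytes_per_param / 1e12

per_chip_gb = hbm_per_chip_gb(TOTAL_HBM_PB, NUM_CHIPS)
print(f"~{per_chip_gb:.0f} GB of HBM per chip")

# Hypothetical model: 1 trillion parameters in 16-bit precision (2 bytes each).
# Ignores activations, optimizer state, and KV caches, which also need memory.
weights_tb = weights_size_tb(1e12, 2)

# Aggregate count only: each copy would be sharded across many chips.
models_that_fit = TOTAL_HBM_PB * TB_PER_PB / weights_tb
print(f"Weights: {weights_tb:.0f} TB; ~{models_that_fit:.0f} copies fit in the pod's HBM")
```

Under those assumptions each chip carries roughly 192 GB of HBM. The copy count is an aggregate upper bound: a 2 TB model would have to be sharded across at least 11 chips, and real workloads also need memory for activations and caches.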

Who’s actually making these chips?

Now here’s where it gets interesting. Google designed Ironwood but doesn’t have the fabrication capability to produce it themselves. They’re being coy about which foundry is actually manufacturing these beasts. Given TSMC’s dominance in cutting-edge chip production and Intel’s ongoing foundry struggles, the smart money is on TSMC handling production.

But wait—doesn’t that put Google in an awkward position? They’re competing with Nvidia and AMD, who also rely heavily on TSMC for their high-performance chips. Basically, everyone’s fighting for the same limited production capacity at the same foundry. That creates a potential bottleneck that could slow down the entire AI industry if demand continues to explode.

The software story matters too

Hardware is only half the battle. Google also announced updates to its software stack, including Cluster Director capabilities in Google Kubernetes Engine and better TPU support in vLLM. It's also improving the open-source MaxText LLM framework, a sign that Google understands developers need tools that actually work with this hardware.

Think about it—what good is a superpod with 9,216 TPUs if you can’t efficiently schedule workloads across them? The software improvements suggest Google is thinking holistically about the entire AI infrastructure stack, not just throwing raw hardware at the problem. That’s actually pretty smart, because history shows that the best hardware often fails without great software to drive it.

Where does this leave the competition?

So where does this put Google in the AI arms race? Pretty strong, honestly. Anthropic's plan to access up to one million TPUs in 2026 is a massive vote of confidence in Google's AI infrastructure. Combine that with Google Cloud's 34% revenue growth and the billion-dollar deals stacking up, and it's clear enterprises are betting big on Google's AI capabilities.

But can they catch Nvidia? That's the billion-dollar question. Nvidia has a massive head start in both hardware and its software ecosystem. Still, Google's vertical integration, from designing its own chips to building its own software and running its own cloud, gives it advantages that pure hardware companies don't have. The next couple of years in AI infrastructure are going to be absolutely wild to watch.