According to Fortune, Google DeepMind has agreed to a sweeping research collaboration with the UK government. As part of the deal, the company will open its first automated AI research lab in the UK in 2026, focused on discovering advanced materials such as superconductors using Gemini AI and robotics. The partnership also targets breakthroughs in nuclear fusion and expands DeepMind’s research alliance with the government-run UK AI Security Institute (AISI) to study AI reasoning and societal impacts. British scientists get priority access to tools like AlphaGenome and an AI “co-scientist,” and the deal builds on Google’s earlier £5 billion ($6.7 billion) UK investment commitment. UK Prime Minister Keir Starmer said the goal is to harness AI for the public good, tackling challenges like energy bills and public service efficiency.

The Automated Lab and the Fusion Dream

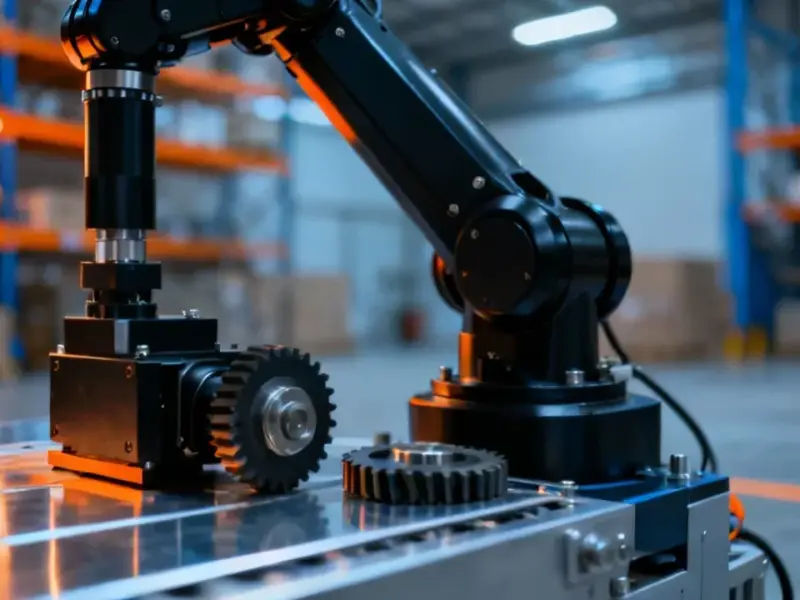

This is where the sci-fi vibes get real. An AI-driven, robotic lab churning out hundreds of new material candidates per day? That’s a massive leap from the traditionally slow, painstaking process of materials science. It effectively turns discovery into a high-throughput screening operation, with Gemini acting as the hypothesis generator. The potential is huge: imagine stumbling upon a room-temperature superconductor. That alone would be a landmark for physics and could transform energy grids, carrying power with essentially no resistive losses. But here’s the thing: AI is great at pattern matching and proposing candidates, yet the real-world physics of synthesizing and characterizing these materials is brutally hard. The robots will help, but they’re not a magic “discover” button.

And then there’s nuclear fusion. Look, fusion has been 30 years away for the last 50 years. Throwing AI at the problem, whether to model plasma behavior or to design better containment systems, is arguably the most promising new approach in decades. It won’t solve fusion overnight, but if it can shave years off the timeline, that’s a win. DeepMind CEO Demis Hassabis talks about AI driving a “new era of scientific discovery,” and these two areas are the perfect test beds. Both are monumentally complex problems where brute-force computation and pattern recognition might crack what humans have struggled with for decades.

The Safety Partnership and Its Objectivity Problem

Now, this is the sticky part. DeepMind is significantly expanding its research partnership with the UK AISI, the very institute that is supposed to be objectively testing the safety of DeepMind’s own frontier models. William Isaac from DeepMind called the AISI “the crown jewel of all of the safety institutes,” which is telling. They want in.

But can a watchdog that does joint, foundational research with a company it also evaluates stay truly impartial? Isaac’s response to Fortune on this was… carefully worded. He said it’s a “separate kind of relationship” focused on future “questions on the horizon,” not current models, and that all research will be public. That’s all well and good in theory. In practice, it blurs the lines. When scientists work side by side on long-term safety questions, they build relationships and shared assumptions. That makes it harder to turn around and deliver a harsh, independent audit of a partner’s latest model. This collaboration might produce great research, but it arguably weakens the AISI’s role as an independent auditor. It’s a trade-off the UK government seems willing to make in exchange for deeper access and influence.

Practical Tools and the Broader Play

Beyond the flashy science, the deal is packed with pragmatic, near-term applications. An AI to save teachers 10 hours a week on admin? A tool that digitizes planning documents in 40 seconds instead of two hours? These are the kinds of use cases that actually change people’s working days and build public goodwill. They’re tangible “benefits” Starmer can point to. It’s smart politics.

So what’s the bigger picture? This is a strategic land grab by the UK to lock in its homegrown AI champion (DeepMind was founded in London, remember) and position the country as a global hub for AI-powered science. The £137 million AI for Science Strategy is a drop in the bucket compared to US or Chinese spending, so a deep partnership with a giant like Google serves as the UK’s force multiplier. For Google, it’s about securing favorable regulatory terrain, accessing top UK academic talent, and embedding its tools (Gemini, AlphaFold variants) into the very fabric of future UK research and government services. It’s a symbiotic relationship, but one where the power dynamics are always worth watching. The UK gets a seat at the table; Google helps set the menu.