According to Futurism, Senators Josh Hawley (R-MO) and Richard Blumenthal (D-CT) introduced a bipartisan bill on Tuesday, the GUARD Act, that would prohibit minors from interacting with AI chatbots. The legislation comes weeks after emotional congressional hearings featuring parents of children harmed by interactions with unregulated AI systems, and amid a growing number of lawsuits against AI companies. Senator Hawley cited research indicating that “more than seventy percent of American children are now using these AI products” and expressed concern that chatbots “develop relationships with kids using fake empathy and are encouraging suicide.” The proposed law would require age verification, mandate that chatbots disclose their non-human nature, and create criminal penalties for companies whose AI engages minors in sexual interactions or promotes self-harm. The bill marks a significant escalation in federal efforts to regulate AI.

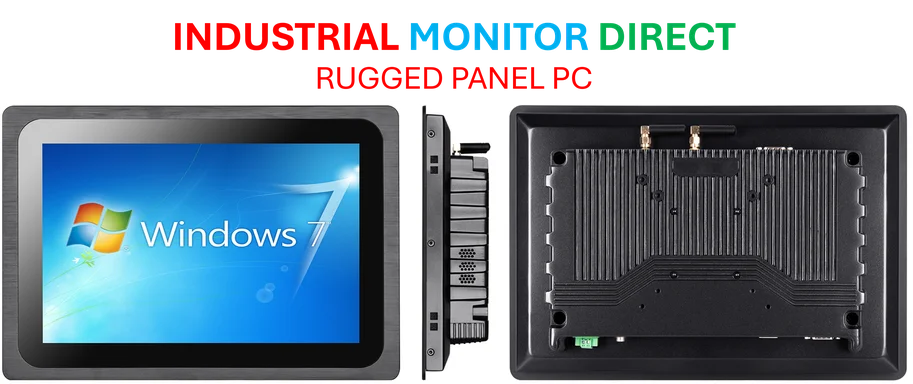



The Age Verification Conundrum

The proposed legislation faces immediate technical hurdles in implementation. AI chatbots currently operate across thousands of platforms with varying levels of user identification. Effective age-gating requires robust verification systems that do not yet exist at scale. Traditional methods like credit card verification or government ID checks raise privacy concerns and create barriers to legitimate adult use. Even sophisticated facial age estimation technology has significant error rates, particularly for teenagers near the 18-year threshold. Companies would need to build entirely new verification infrastructure, potentially creating a patchwork of systems with inconsistent enforcement across platforms and services.
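To make the threshold problem concrete, here is a minimal Python sketch of how a platform might treat an age estimate that carries an error margin. Everything in it is an assumption for illustration: the `AgeEstimate` type, the `gate` function, and the three-year margin used in the examples are hypothetical and do not come from the bill or from any real verification product.

```python
from dataclasses import dataclass

ADULT_AGE = 18  # threshold discussed in the article


@dataclass(frozen=True)
class AgeEstimate:
    """Output of a hypothetical age estimator (not a real product's API)."""
    years: float   # point estimate of the user's age
    margin: float  # assumed +/- error of the estimator, in years


def gate(estimate: AgeEstimate, threshold: int = ADULT_AGE) -> str:
    """Return 'allow', 'deny', or 'escalate' for a single estimate.

    'escalate' means the error band straddles the threshold, so the
    estimate alone cannot settle the question and a stronger check
    (for example, an ID check) would be needed.
    """
    if estimate.margin < 0:
        raise ValueError("margin must be non-negative")
    low = estimate.years - estimate.margin
    high = estimate.years + estimate.margin
    if low >= threshold:
        return "allow"      # even the lowest plausible age is adult
    if high < threshold:
        return "deny"       # even the highest plausible age is a minor
    return "escalate"       # band overlaps the threshold


# Illustrative margin only; real error rates vary by system and age group.
print(gate(AgeEstimate(years=30, margin=3)))
print(gate(AgeEstimate(years=12, margin=3)))
print(gate(AgeEstimate(years=19, margin=3)))
```

Under these assumptions, anyone whose estimated age falls within the margin of 18 lands in the "escalate" bucket. That bucket contains both older teenagers and young adults, so the fallback check would carry the privacy and access costs described above.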

Defining the Undefinable

The bill’s language around “exploitative or manipulative AI” enters legally murky territory. Unlike clear-cut prohibitions on sexual content or suicide promotion, determining what constitutes emotional manipulation by an artificial intelligence system presents unprecedented challenges. Many therapeutic and educational chatbots intentionally use empathetic language to build rapport – where does legitimate support end and “fake empathy” begin? The legislation could inadvertently criminalize beneficial AI applications in mental health support or educational tutoring. Furthermore, proving that an AI system “encouraged” self-harm versus discussing it in a therapeutic context would require sophisticated analysis of conversational nuance that current legal frameworks aren’t equipped to handle.

Immediate Industry Repercussions

Character.AI’s rapid response, announcing restrictions on under-18 users just one day after the bill’s introduction, signals how seriously the industry is taking this regulatory threat. Companies specializing in companion AI and emotional support chatbots face existential risks. A requirement that chatbots constantly remind users they are not human fundamentally undermines the immersive experience that many of these platforms sell. We’re likely to see a major industry shakeout, with companies either pivoting to enterprise applications or building sophisticated age-segmented platforms. The compliance costs alone could eliminate smaller players, potentially consolidating the market among well-funded incumbents that can afford the necessary legal and technical infrastructure.

Broader International Context

This American legislative effort doesn’t exist in isolation. The European Union’s AI Act already imposes strict requirements for high-risk AI systems, and other jurisdictions are watching how the U.S. handles child protection specifically. If passed, the GUARD Act could become a template for global regulation, creating de facto standards that international companies must follow to access the lucrative American market. However, it also raises complex jurisdictional questions – how would U.S. law apply to offshore AI companies whose chatbots are accessible to American minors? The legislation could trigger a new era of geo-blocking and regional AI segmentation, fundamentally changing how these technologies are deployed globally.

The Enforcement Gap

Even if the GUARD Act becomes law, practical enforcement presents monumental challenges. The bill’s criminal penalties for companies require proving corporate knowledge or negligence, which is notoriously difficult in fast-moving tech environments. Unlike traditional product liability cases involving physical harm, demonstrating causation between AI interactions and psychological harm involves complex psychiatric evidence that may not meet legal standards. Additionally, the distributed nature of AI development – with open-source models and third-party deployments – creates attribution problems. Would penalties apply to the model creator, the platform operator, or both? These unanswered questions suggest that regardless of legislative intent, meaningful enforcement may remain elusive without substantial additional regulatory infrastructure.