According to Thurrott.com, Apple is close to finalizing a deal with Google to use its Gemini AI models for Siri. The company will pay $1 billion annually for access to a high-end Gemini model with 1.2 trillion parameters. This comes after Apple tested both OpenAI’s ChatGPT and Anthropic’s Claude but found Gemini better suited for its needs. The integration will focus on summarizing and planning functionality while running on Apple’s Private Cloud Compute infrastructure. Apple has already allocated the necessary hardware and now plans to ship conversational Siri in the first half of 2026.

Desperate Times Call for Desperate Measures

Here’s the thing: Apple is basically admitting it can’t compete in the AI race on its own. They announced Apple Intelligence with great fanfare at WWDC 2024, but Siri remains, well, Siri. The assistant that can’t assistant. Now they’re turning to their longtime frenemy Google for help, and paying a billion dollars a year for the privilege.

But let’s be real – this isn’t some strategic masterstroke. This is Apple playing catch-up after years of treating AI as an afterthought. They’ve been delaying conversational Siri features for months while competitors like Google Assistant and Amazon’s Alexa have been iterating. Even Microsoft’s Copilot integration across Windows feels more cohesive than whatever Apple’s been trying to build.

A Billion Dollar Bandage

So Apple’s throwing money at the problem. But $1 billion annually? That’s actually pocket change: a twentieth, at most, of the $20+ billion a year Google already pays Apple to be the default search engine on iPhones. This whole arrangement feels like two tech giants who can’t quite live without each other, no matter how much they pretend to be competitors.

The real question is whether throwing Google’s AI at Siri will actually fix the fundamental issues. Siri’s problems aren’t just about model sophistication – they’re about integration, context awareness, and Apple’s famous walled garden approach. Can Gemini really work magic when it’s constrained by Apple’s privacy-first, control-everything philosophy?

The Hardware Reality

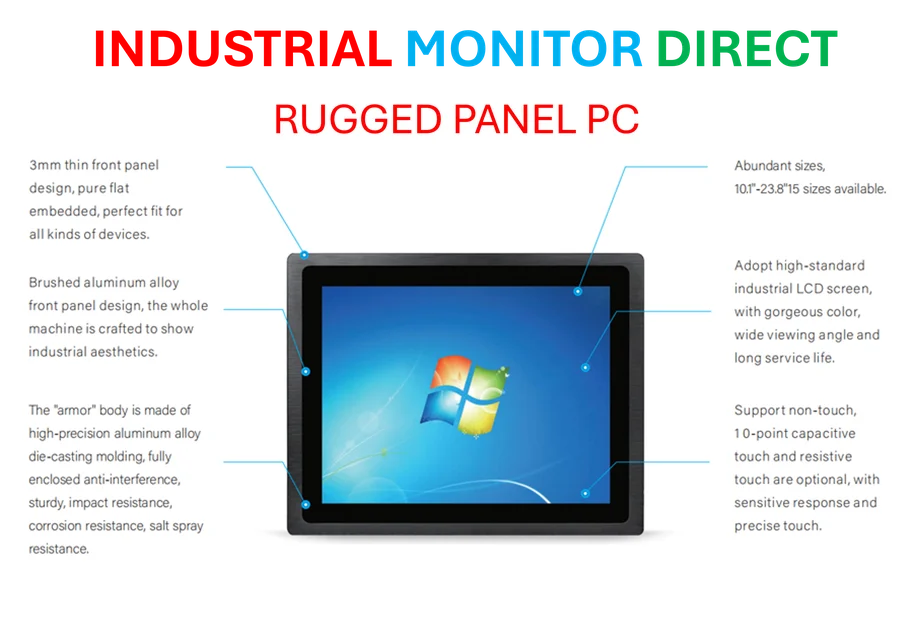

Now, there’s an interesting technical angle here. Apple says Gemini will run on their Private Cloud Compute infrastructure, which means they’re building out serious server capacity. When you’re dealing with industrial-scale computing needs for AI inference, reliability becomes everything.
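For a rough sense of what "serious server capacity" means, here's a back-of-envelope sketch of how much memory it takes just to hold the weights of a 1.2 trillion parameter model. The parameter count is the reported figure; the bytes-per-parameter values are my own assumptions, since the report doesn't say what precision the model runs at. It also ignores everything beyond the raw weights (working memory, caching, redundancy), so treat it as a lower bound, not a spec.

```python
# Back-of-envelope: memory needed just to store the model weights.
# PARAMS is the reported figure; the precisions below are assumptions.
PARAMS = 1.2e12  # 1.2 trillion parameters, per the report

# Hypothetical storage precisions (bytes per parameter); not from the report.
BYTES_PER_PARAM = {"16-bit": 2, "8-bit": 1, "4-bit": 0.5}


def weight_memory_tb(params: float, bytes_per_param: float) -> float:
    """Return weight storage in terabytes (1 TB = 1e12 bytes)."""
    return params * bytes_per_param / 1e12


if __name__ == "__main__":
    for label, nbytes in BYTES_PER_PARAM.items():
        print(f"{label}: {weight_memory_tb(PARAMS, nbytes):.1f} TB")
```

Even under the most aggressive assumption here, that's hundreds of gigabytes of weights for a single copy of the model, before it has answered a single Siri request.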

But here’s my skepticism: Apple building AI infrastructure feels like déjà vu. Remember when they were going to revolutionize maps? Or when they were going to beat Spotify at music streaming? They have a track record of entering markets late and overpromising. I’ll believe conversational Siri works when I actually have a useful conversation with it in 2026.

The Long Game

Basically, this feels like a temporary fix until Apple can build its own models. The company clearly states they’ll replace Gemini with their own tech when it’s “sophisticated enough.” So we’re looking at what – two, three years of Google-powered Siri before Apple tries to cut them out?

And let’s not forget the timing. First half of 2026? That’s nearly two years from now. In AI time, that’s basically three generations of model improvements. By then, today’s cutting-edge 1.2 trillion parameter model might look quaint. Apple might be buying yesterday’s technology to solve tomorrow’s problems.