According to Techmeme, Artificial Analysis has just launched AA-Omniscience, a new benchmark that tests knowledge and hallucination across more than 40 topics and finds that nearly all models are more likely to hallucinate than to give a correct answer. Of the many AI systems evaluated, Claude 4.1 Opus took first place on the benchmark's key metric, yet even the top performer was only barely more likely to answer correctly than to hallucinate. The release comes amid broader market volatility: CoinGecko reports that cryptocurrency's total market value has dropped 25% since October 6, wiping out $1.2 trillion, while Bitcoin has fallen 28% to $89,500, its lowest level since April and essentially flat for 2025.

The Sobering Reality of AI Accuracy

Here’s the thing that really stands out: only three models in the entire benchmark were more likely to give a correct answer than to hallucinate. Think about that for a second. These are systems that companies are integrating into customer service, research, and even decision-making workflows. And the “winner,” Claude 4.1 Opus, only barely cleared the bar of being right more often than it makes things up. That’s like celebrating a student who got a D instead of an F.

The benchmark covers more than 40 topics, so this isn’t a narrow test that models could be specially tuned for; it’s a broad evaluation of knowledge. When Artificial Analysis states that “embedded knowledge in language models is important for many real world use cases,” it’s pointing to exactly the area where hallucinations become dangerous. Medical advice? Legal research? Financial analysis? In those settings, confident-sounding wrong answers aren’t just amusing; they’re potentially catastrophic.

What This Means for AI Adoption

Now, I’ve been watching these benchmark announcements for years, and there’s always a pattern: a company releases a new testing methodology, the top models cluster around mediocre performance, and everyone acts surprised. But this one feels different because it specifically targets the knowledge reliability that matters for enterprise adoption. As one observer noted, we’re in the “trough of disillusionment” phase, where the initial hype meets real-world limitations.

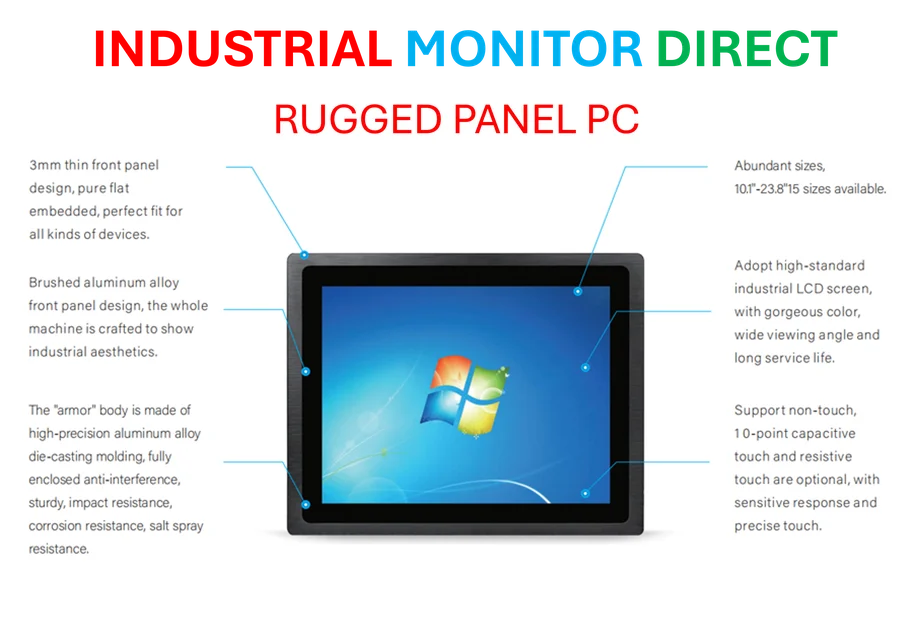

What’s particularly concerning is the timing. Massive investment is flowing into AI companies, some of which are seeking valuations comparable to those of established industrial giants, while the underlying technology still can’t reliably distinguish fact from fiction. There’s a disconnect between investor enthusiasm and technical reality. When you’re building systems that interface with physical infrastructure or industrial applications, accuracy isn’t optional; it’s everything. That’s why companies working in manufacturing and industrial tech can’t afford to treat AI as anything more than an assistive tool right now.

Basically, we’ve been so focused on what AI can do that we haven’t paid enough attention to what it consistently gets wrong. When benchmarks like this show that most models are more likely to invent information than to provide correct answers, it’s time to reconsider where and how we deploy this technology. The gap between AI’s promise and its delivery just became far more measurable, and the results should give everyone pause.