AI Conversations Becoming Digital Evidence

Artificial intelligence chatbots create permanent records of user conversations, and law enforcement agencies are increasingly using those records as evidence in criminal investigations, according to recent reports. Security analysts say the trend points to significant risks for businesses whose employees share sensitive information with AI systems.

Palisades Fire Case Sets Precedent

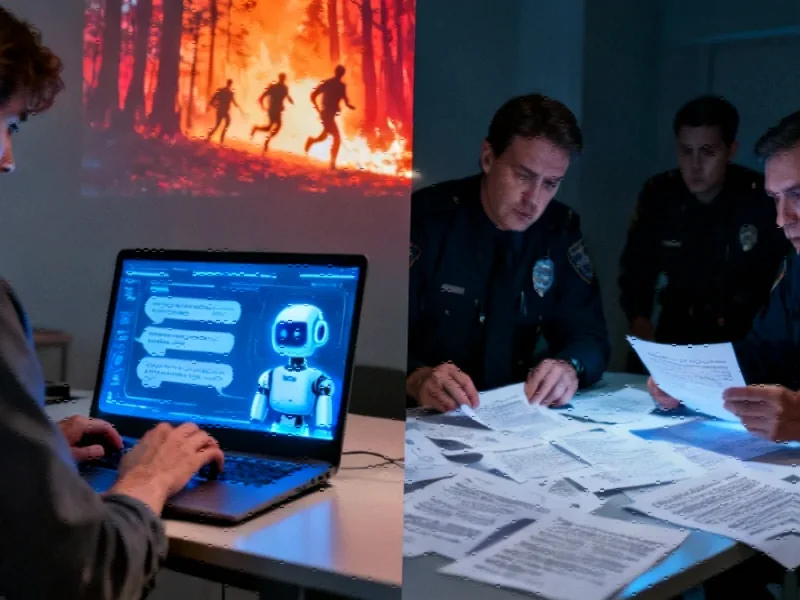

Prosecutors have unsealed arson and murder charges in connection with the Palisades Fire, which killed twelve people and destroyed thousands of homes, according to court documents. They allege the blaze traces back to a fire set on January 1, 2025. Law enforcement reportedly used the suspect’s ChatGPT logs to build the case, making it one of the first major prosecutions in which AI conversation history played a central role.

Before the fire, the suspect allegedly used ChatGPT to create images of burning forests and people running, sources indicate. During his 911 call, he reportedly asked the chatbot, “Are you at fault if a fire starts from your cigarette?” Investigators used these interactions to build what they describe as a narrative of planned criminal activity.

Corporate AI Use Creates Permanent Records

Security experts suggest that similar AI chat records in corporate environments could expose business secrets, competitive research, and strategic plans if adversaries obtain them. According to analysts, AI chat logs capture mindset, motive, and thoughts in progress better than any previous digital artifact, and so amount to a detailed record of organizational intent.

“Every company where these large language models are being regularly used is potentially generating new evidence every single day that could be used against it by law enforcement later,” one security professional noted in the report. Beyond trade secrets, the data could reveal future business plans and internal decision-making processes.

AI Companies Monitoring for Criminal Intent

Several AI companies say in their published policies that they can flag user activity that appears to foreshadow criminal behavior. OpenAI states that, in limited circumstances, it may disclose user data to law enforcement agencies when necessary to prevent an emergency involving danger of death or serious physical injury.

Similarly, Anthropic’s policy for handling governmental requests for user information and Perplexity AI’s privacy policy outline circumstances under which user data might be shared with authorities. These companies are reportedly improving their ability to detect both foreign adversaries and domestic users who may be planning harmful activities.

Security Shift: From “No” to “Yes With Guardrails”

Security professionals suggest that the traditional approach of blocking AI usage is increasingly ineffective and counterproductive. According to industry experts, governance built around denial pushes employees toward personal accounts and unauthorized tools, creating what security analysts call “shadow AI” problems.

“Even highly regulated industries including banking, finance, and healthcare are already learning to say ‘yes’ to AI,” the report states, citing AI use in healthcare and enterprise AI implementations as examples of secure adoption.

Distributed Security Model Emerging

Security culture has traditionally leaned toward centralization, but analysts suggest AI requires distributed oversight across business units. The approach involves security personnel who observe, guide, and educate rather than simply enforce blocks, according to security frameworks being developed for AI governance.

The SANS Institute Secure AI Blueprint addresses governance risk in AI adoption through three pillars: Protect, Utilize, and Govern AI. This framework helps organizations design guardrails through access control, auditability, model integrity, and human oversight while accommodating workforce technology needs and evolving legal standards.

As organizations navigate these challenges, security experts emphasize that the baseline must shift from outright prohibition to managed acceptance with appropriate safeguards. The goal, according to analysts, is not to stop AI usage but to ensure it happens safely while maintaining visibility into potential risks associated with these increasingly powerful tools.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.